In 2016, as I was preparing to write my “Why Hillary Will Win” piece, I decided to have my able then-assistant, David Byler (now of Washington Post fame), do a bit of research. His job was to look up the share of the electorate that pollsters were anticipating for whites without college degrees and for African Americans.

What he found put an end to the piece. Pollsters appeared to be betting heavily that black turnout in 2016 would match its 2012 levels and, more importantly, they seemed to be badly underestimating turnout among whites without college degrees. In previous years that hadn't really mattered, because whites with and without college degrees voted Republican at roughly the same rates. Underestimating the share of whites without college degrees and overestimating the share of whites with them wouldn't have skewed the polls in 2012 or 2008, because the two groups' votes were fungible.

On a hunch, I went back and looked at the poll errors from 2013 to 2015, and it became apparent that the 2016 errors followed much the same pattern: they were concentrated in areas with large numbers of whites without college degrees. Indeed, the size of the poll error correlated strongly with the share of the electorate made up of whites without college degrees (p < .001); you could explain about one-third of the variation in the size of the poll miss just from knowing that share.
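"Explaining about one-third" of the variation is the language of R², the share of the variance in poll error that a linear fit accounts for. Assuming the figure comes from a simple one-predictor regression (an assumption; the underlying model isn't spelled out here), R² is just the square of the correlation coefficient r, so one-third of the variance corresponds to a correlation of roughly 0.58:

$$
R^2 = r^2 \approx \tfrac{1}{3} \quad\Longrightarrow\quad |r| \approx \sqrt{\tfrac{1}{3}} \approx 0.58
$$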

We all know what happened next. Trump surprised observers by winning states that Republican presidential candidates hadn’t carried since Debbie Gibson and Tiffany fought it out for top placement in the Top 40 charts. The misses were particularly pronounced in the Midwest.

Most pollsters attributed the misses to their failure to weight by education, and when one raises the 2016 errors in discussions of the 2020 election, the typical answer is, “Pollsters now weight by education, so they’ve fixed it.”

But have they? We actually have a pretty nice sample from 2018 to draw upon. If pollsters have really figured out where they went wrong in key states in 2016, we should see a marked improvement over 2016 and 2014.

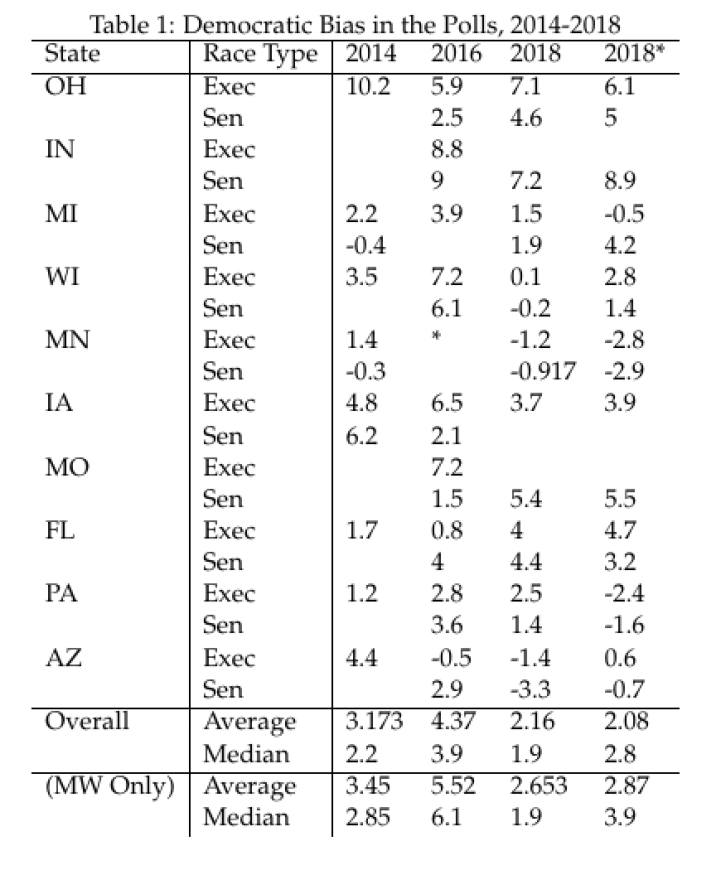

So I went back and looked at the Democratic bias in the polls for swing states in 2014, 2016, and 2018. I could not use North Carolina, since there was no statewide race there in 2018. One problem I encountered is that many states were under-polled in 2018, so RealClearPolitics (RCP) didn't create an average for them. For those states I've averaged the October polls where available (note that Minnesota had fewer than three October polls in 2016, hence the asterisk there). As a check on this approach, I've also included the error from the 538 “polls-only” model for 2018.
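The bias calculation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the exact procedure behind the table: it assumes margins are expressed as Democratic share minus Republican share in percentage points, that "Democratic bias" means the October polling average minus the actual margin (so a positive number means the Republican beat the polls), and that a race is flagged when fewer than three October polls exist, echoing the Minnesota asterisk. The function name `democratic_bias`, the `MIN_POLLS` threshold and the sample numbers are all hypothetical.

```python
from statistics import mean

# Hypothetical threshold: flag races with fewer than three October polls,
# mirroring the asterisk used for Minnesota.
MIN_POLLS = 3


def democratic_bias(october_poll_margins, actual_margin):
    """Return (bias, flagged) for one race.

    Margins are assumed to be Democratic share minus Republican share,
    in percentage points. A positive bias means the polling average
    overstated the Democrat, i.e. the Republican overperformed the polls.
    flagged is True when fewer than MIN_POLLS polls were averaged.
    """
    if not october_poll_margins:
        raise ValueError("no October polls to average")
    # Simple unweighted average of the available October polls.
    poll_average = mean(october_poll_margins)
    bias = poll_average - actual_margin
    flagged = len(october_poll_margins) < MIN_POLLS
    return bias, flagged


# Hypothetical race: October polls show D+4, D+2 and D+3,
# and the Republican wins by 1 point (a margin of -1).
bias, flagged = democratic_bias([4.0, 2.0, 3.0], -1.0)
print(f"Democratic bias: {bias:+.1f} points, flagged: {flagged}")
```

With these made-up inputs the polls showed the Democrat up 3 on average while the Republican won by 1, so the sketch reports a Democratic bias of +4.0 points. A plain unweighted mean keeps the arithmetic transparent; real averages make their own choices about which polls to include and how to weight them.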

The results are mixed, but overall it isn't clear that pollsters have really fixed the problem at all. While the bias toward Democrats was smaller in 2018 than in 2016, it was similar to what we saw in 2014, especially in the Midwest. If you recall, the 2018 polls suggested that we should today have Democratic governors in Ohio, Iowa and Florida, and new Democratic senators in Indiana, Missouri and Florida. Obviously, this did not come to pass.

Moreover, almost all of the errors pointed the same way: Republicans overperformed the polls in every Midwestern race except the Minnesota Senate and governor races and the Wisconsin Senate race (none of which was particularly competitive). This is true, incidentally, across the whole period: we see marginal Democratic overperformances in the Michigan and Minnesota Senate races in 2014, but otherwise pollsters have consistently underestimated Republican strength. Had we added the competitive 2018 Senate race in North Dakota and the governor's race in South Dakota, we'd also see Republican overperformances of a couple of points.

Outside the Midwest, the picture was uneven: Florida polling was worse than it had been in 2014 or 2016, Arizona was much better, and the uncompetitive races in Pennsylvania were something of a wash (people forget that the Pennsylvania polling in 2016 really did suggest a tight race).

At the same time, we should keep in mind that predicting poll errors is something of a mug’s game; pollsters change techniques from year to year, and they do learn. In 2014 there was something of a fight among election analysts over whether we should expect an error in the Republicans’ direction that year, given the results in 2012 and 2010. As I wrote then:

> The bottom line, I think, is that it is difficult to translate these observations into a prediction. It is one thing to say, “There may have been skew in the previous two cycles.” It is quite another to say, “On the basis of this, we can predict what will happen in the following cycle.” … After all, the claim here is not simply that the polls may be skewed. The claim is that the polls may be skewed in a Democratic direction in this year.
>
> In other words, to take this seriously, you have to take it as a prediction. The problem arises when you ask the question: How can we make this prediction reliably, e.g., with some sort of methodology and based upon actual evidence?

So the point here is not that we should expect polls in the Midwest or Florida to be biased against Republicans in 2020. They may well not be. We should also keep in mind that the Upper Midwest was under-polled in 2016, and that will not be the case this year. Instead, the point is that we should remain open to the possibility that this can happen again, and not take at face value assurances that pollsters have fixed the problem.